An erotic roleplay chatbot and AI image creation platform called Secret Desires left nearly two million photos and videos exposed and available to the public, including many user-uploaded photos of completely random people with very little digital footprint.

The exposed data shows how many people use AI roleplay apps that offer face-swapping features: to create nonconsensual sexual imagery of everyone, from the most famous entertainers in the world to women who are not public figures in any way. In addition to the real photo inputs, the exposed data includes AI-generated outputs, which are mostly sexual and often incredibly graphic. Unlike “nudify” apps that generate nude images of real people, this platform put people into AI-generated videos of hardcore sexual scenarios.

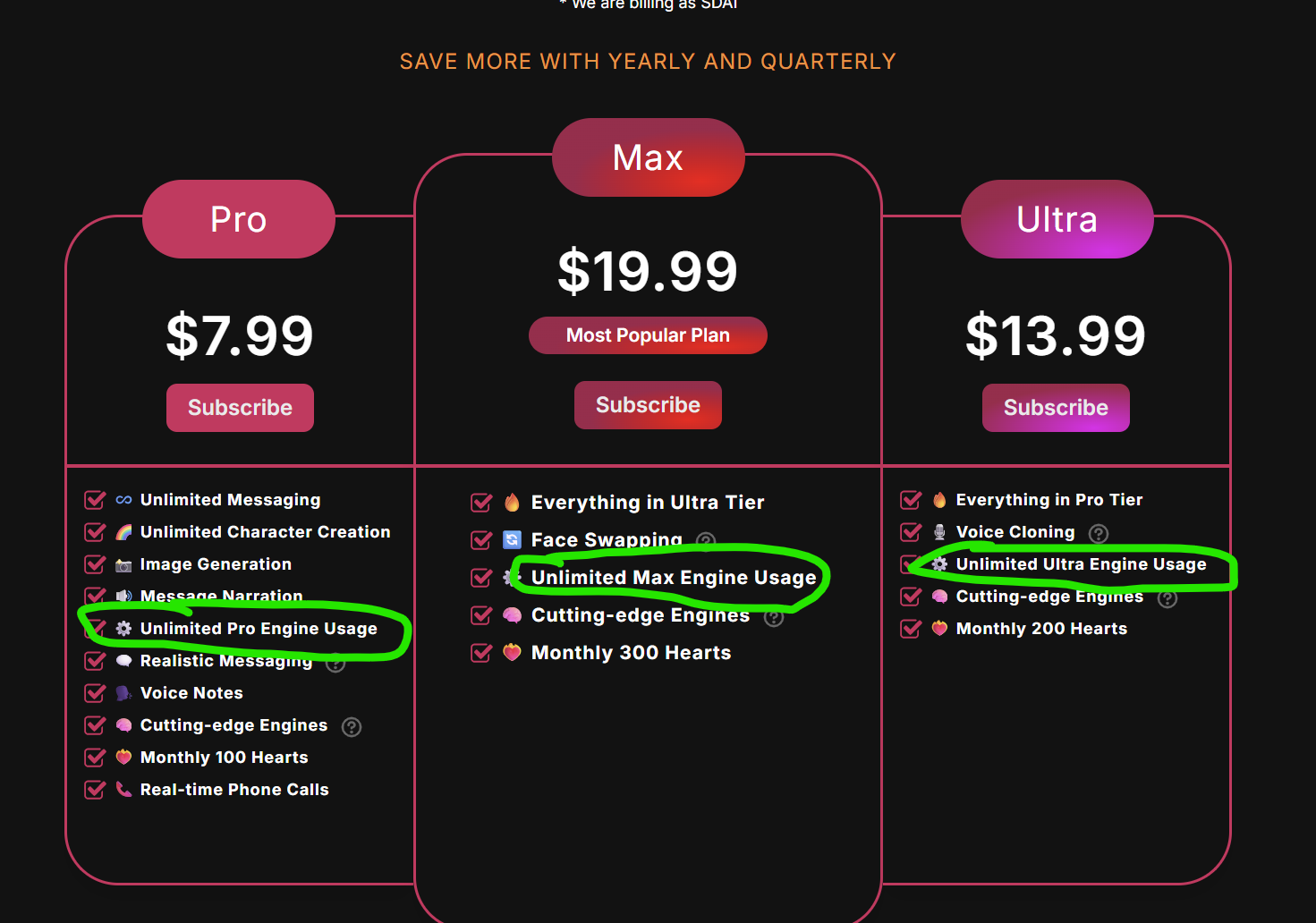

Secret Desires is a browser-based platform similar to Character.ai or Meta’s AI avatar creation tool, which generates personalized chatbots and images based on user prompting. Earlier this year, as part of its paid subscriptions that range from $7.99 to $19.99 a month, it had a “face swapping” feature that let users upload images of real people to put them in sexually explicit AI-generated images and videos. These uploads, viewed by 404 Media, are a large part of what’s been exposed publicly, and based on the dates of the files, they were potentially exposed for months.

About an hour after 404 Media contacted Secret Desires on Monday to alert the company to the exposed containers and ask for comment, the files became inaccessible. However, neither Secret Desires nor Jack Simmons, CEO of its parent company Playhouse Media, responded to my questions, including why these containers weren’t secure and how long they were exposed.

The platform was storing links to images and videos in unsecured Microsoft Azure Blob containers, where anyone could access XML files containing links to the images and go through the data inside. A container labeled “removed images” contained around 930,000 images, many of recognizable celebrities and very young looking women; a container named “faceswap” contained 50,000 images; and one named “live photos,” referring to short AI-generated videos, contained 220,000 videos. A number of the images are duplicates with different file names, or show the same person from different angles or in different crops, but in total there were nearly 1.8 million individual files in the containers viewed by 404 Media.

The photos in the removed images and faceswap datasets are overwhelmingly real photos (meaning, not AI generated) of women, including adult performers, influencers, and celebrities, but also photos of women who are definitely not famous. The datasets also include many photos that look like they were taken from women’s social media profiles, like selfies taken in bedrooms or smiling profile photos.

In the faceswap container, I found a file photo of a state representative speaking in public, photos where women took mirror selfies seemingly years ago with flip phones and Blackberries, screenshots of selfies from Snapchat, a photo of a woman posing with her university degree, and a yearbook photo. Some of the file names include full first and last names of the women pictured. These and many more photos are in the exposed files alongside stolen images from adult content creators’ videos and websites and screenshots of actors from films. Their presence in this container means someone was uploading their photos to the Secret Desires face-swapping feature, likely to make explicit images of them, as that’s what the platform advertises itself as being built for, and because a large amount of the exposed content is sexual imagery.

Some of the faces in the faceswap container are recognizable in the generations in the “live photos” container, which appears to hold outputs generated by Secret Desires and consists almost entirely of hardcore pornographic AI-generated videos. In this container, multiple videos feature extremely young-looking people having sex.

In early 2025, Secret Desires removed its face-swapping feature. The most recent date in the faceswap files is April 2025. This tracks with Reddit comments from the same time, where users complained that Secret Desires “dropped” the face swapping feature. “I canceled my membership to SecretDesires when they dropped the Faceswap. Do you know if there’s another site comparable? Secret Desires was amazing for image generation,” one user said in a thread about looking for alternatives to the platform. “I was part of the beta testing and the faceswop was great. I was able to upload pictures of my wife and it generated a pretty close,” another replied. “Shame they got rid of it.”

In the Secret Desires Discord channel, where people discuss how they’re using the app, users noticed that the platform still listed “face swapping” as a paid feature as of November 3. As of writing, on November 11, face swapping isn’t listed in the subscription features anymore. Secret Desires still advertises itself as a “spicy chatting” platform where you can make your own personalized AI companion, and it has a voice cloning mode, where users can upload an audio file of someone speaking to clone their voice in audio chat modes.

On its site, Secret Desires says it uses end-to-end encryption to secure communications from users: “All your communications—including messages, voice calls, and image exchanges—are encrypted both at rest and in transit using industry-leading encryption standards. This ensures that only you have access to your conversations.” It also says it stores data securely: “Your data is securely stored on protected servers with stringent access controls. We employ advanced security protocols to safeguard your information against unauthorized access.”

The prompts exposed by some of the file names are also revealing about how some people use Secret Desires. Several prompts in the faceswap container, visible as file names, showed users’ “secret desire” was to generate images of underage girls: “17-year-old, high school junior, perfect intricate detail innocent face,” several prompts said, along with names of young female celebrities. We know from hacks of other “AI girlfriend” platforms that this is a popular demand of these tools; Secret Desires specifically says in its terms of use that it forbids generating underage images.

Secret Desires runs advertisements on YouTube where it markets the platform’s ability to create sexualized versions of real people you encounter in the world. “AI girls never say no,” an AI-generated woman says in one of Secret Desires’ YouTube Shorts. “I can look like your favorite celebrity. That girl from the gym. Your dream anime character or anyone else you fantasize about? I can do everything for you.” Most of Secret Desires’ ads on YouTube are about giving up on real-life connections and dating apps in favor of getting an AI girlfriend. “What if she could be everything you imagined? Shape her style, her personality, and create the perfect connection just for you,” one says. Other ads proclaim that in an ideal reality, your therapist, best friend, and romantic partner could all be AI. Most of Secret Desires’ marketing features young, lonely men as the users.

We know from years of research into face-swapping apps, AI companion apps, and erotic roleplay platforms that there is a real demand for these tools, and a risk that they’ll be used by stalkers and abusers for making images of exes, acquaintances, and random women they want to see nude or having sex. We also know that they’re accessible and advertised all over social media, and that children find these platforms easily and use them to create child sexual abuse material of their classmates. When people make sexually explicit deepfakes of others without their consent, the aftermath for their targets is often devastating; it impacts their careers, their self-confidence, and in some cases, their physical safety. Because Secret Desires left this data in the open and mishandled its users’ data, we have a clear look at how people use generative AI to sexually fantasize about the women around them, whether those women know their photos are being used or not.